WOW THAT WAS A LOT OF WORK PUT INTO 4 DAYS (Texturizing)

So, now that we have a system, let's discuss for a bit the plausibility of this process as well as what I ended up doing. The framework of the program remained the same: keywords are defined and checked by the speech recognition software, and the triggering events send messages into VCV to affect the music being played. It is here that we have to discuss my first conceptual issue. In previous posts I've shared the amount of work and modules that had to be put in place to construct a mere 4-chord harmony (there are most likely better ways to do it, but I digress), and creating a single-voice melody proved to be equally difficult. Having multiple voices control a single oscillator (or worse, having to control several single-voice oscillators) is a really inefficient way to create music in this system. Most tools and tutorials specialize in constructing and developing textures through single voices (which mine technically is, but since it comes from multiple sources it registers as a polyphonic value), so creating textures and making use of more modules was very hard. After a lot of playing around, I couldn't find many satisfying ways to generate textures that would successfully use more modules and adapt to the mood in the process.

This does not mean that it cannot be done, far from it. VCV is extremely flexible, but its patches work best as products of their own interactions: a system that uses only a couple of modules to generate signals and then re-sends those signals to add to the sound is what this type of software is made to do. It was a little overzealous of me to try to apply general rules of music creation to a system that is better suited to a different approach to music making.

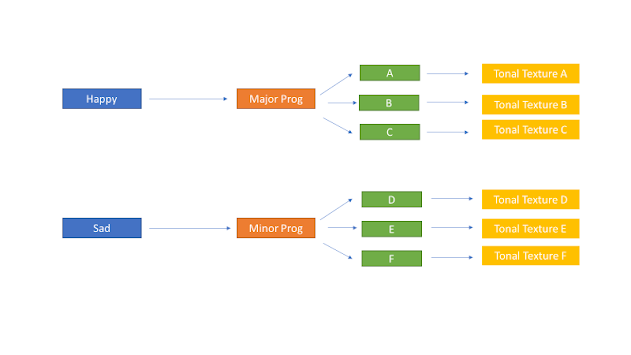

It is here that I admit that I couldn't construct all the moods that I had defined. After a lot of work and trial and error, I managed to build all 3 moods for the minor progression, but only 1 mood for the major progression. Since the patch starts in the major progression, the default, unedited values of the patch, which account for the "Calm" mood, are in place, and the code transmits no changes to influence them apart from the previously mentioned bpm and Gain values.

- Tense (60bpm) - Dry (0.34-0.2)[4,0] - Wet (0.5 - 0.75)[5,7] - PreD(0.1 - 0.3)[6,3] - Clock(2 - 8)[6,67]

- Worry (100bpm) - Dry (0.34-0.75)[4,7] - Wet (0.5 - 0.2)[5,0] - PreD(0.1)[6,0] - Clock(2 - 8)[6,80]

- Somber (100bpm) - Vol0,2 (127-0)[0,0] - Vol2 (120-127)[]
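
To make the list above a bit easier to read, here is a rough Python sketch of how those mood settings could be kept in one table. This is not the actual code of the project, just an illustration. A few things here are my assumptions: the names `ParamChange`, `MOODS`, `value_at` and `targets_for` are made up for the example, the two numbers in parentheses are treated as a start and an end value, the single PreD value for Worry is treated as "stays at 0.1", and the movement between start and end is assumed to be linear. The bracketed pairs are left out on purpose, since this sketch doesn't try to interpret them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ParamChange:
    """Start and end value of one parameter (assumed reading of '(a - b)')."""
    start: float
    end: float


# Values copied from the mood list; the bracketed pairs are intentionally omitted.
MOODS = {
    "Tense": {
        "bpm": 60,
        "params": {
            "Dry": ParamChange(0.34, 0.2),
            "Wet": ParamChange(0.5, 0.75),
            "PreD": ParamChange(0.1, 0.3),
            "Clock": ParamChange(2, 8),
        },
    },
    "Worry": {
        "bpm": 100,
        "params": {
            "Dry": ParamChange(0.34, 0.75),
            "Wet": ParamChange(0.5, 0.2),
            "PreD": ParamChange(0.1, 0.1),  # assumption: single value means no change
            "Clock": ParamChange(2, 8),
        },
    },
    "Somber": {
        "bpm": 100,
        "params": {
            "Vol0,2": ParamChange(127, 0),
            "Vol2": ParamChange(120, 127),
        },
    },
}


def value_at(change: ParamChange, t: float) -> float:
    """Linearly interpolate from start to end; t is clamped to [0, 1]."""
    t = min(max(t, 0.0), 1.0)
    return change.start + (change.end - change.start) * t


def targets_for(mood: str, t: float = 1.0) -> dict:
    """Return every parameter value of a mood at position t of the transition."""
    return {name: value_at(c, t) for name, c in MOODS[mood]["params"].items()}
```

Calling `targets_for("Tense")` would give the end values for the Tense mood, and `targets_for("Tense", 0.5)` the halfway point of the transition. Keeping everything in one table like this would make it easier to add the missing major-progression moods later without touching the rest of the program.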
